import os
import re

import requests
from bs4 import BeautifulSoup
from Crypto.Cipher import AES  # provided by the pycryptodome package

def tsList(Index):
    # m3u8.txt layout: line 0 is the playlist URL, line 1 the AES key (or the
    # '000000000000' placeholder when unencrypted), followed by the ts links.
    m3u8Path = os.getcwd() + '/m3u8.txt'
    with open(m3u8Path, 'r') as f:
        stored = f.read()
    if '.ts' in stored:
        print('ts links are already stored; no need to request them again')
    else:
        print('Fetching and storing ts links')
        m3u8Url = stored.splitlines()[0].strip()
        content = requests.get(m3u8Url, headers=headers)
        # jiami ("encryption"): attribute list of the #EXT-X-KEY tag, if present
        jiami = re.findall('#EXT-X-KEY:(.*)\n', content.text)
        m3u8Url_before = ''
        if len(jiami) > 0:
            key = str(re.findall('URI="(.*)"', jiami[0]))[2:-2]
            if 'http' not in key:
                # Relative key URI: rebuild scheme and host from the playlist URL
                m3u8Start = m3u8Url.find("\"url\":\"") + 7
                m3u8End = m3u8Url.find(".m3u8") + 5
                m3u8Url = m3u8Url[m3u8Start:m3u8End].replace('\\', '')
                m3u8Url_before = "https://" + m3u8Url.split('/')[2]
            # headers must be a keyword argument: the second positional
            # parameter of requests.get is params, not headers
            keycontent = requests.get(m3u8Url_before + key, headers=headers).text
            with open(m3u8Path, 'a') as f:
                f.write(keycontent + '\n')
        else:
            with open(m3u8Path, 'a') as f:
                f.write('000000000000')
        if content.status_code == 200:
            pattern = re.compile(r'http[s]?://(?:[a-zA-Z]|[0-9]|[$-_@.&+]|[!*\(\),]|(?:%[0-9a-fA-F][0-9a-fA-F]))+')
            for item in content.text.split(","):
                if 'http' not in item:
                    # Relative segment path: prepend the host
                    item = (m3u8Url_before + item).replace("\n", "")
                matches = pattern.findall(item)
                url = matches[0] if matches else ''
                with open(m3u8Path, 'a') as f:
                    f.write(url + '\n')
    Download(Index)

def Download(Index):

now = videoName + "_第" + str(Index+1) + '集'

index = 0

try:

with open(os.getcwd()+ '/index.txt','r') as f3:

index = int(float(f3.read()))

except FileNotFoundError as e:

index = 4

index1 = index

with open(os.getcwd()+ '/m3u8.txt','r') as getKey:

keycontent = getKey.readlines()[1][0:-1]

print(keycontent)

if keycontent == '000000000000':

print("未加密")

else:

cryptor = AES.new(keycontent.encode('utf-8'), AES.MODE_CBC, keycontent.encode('utf-8'))

with open(os.getcwd()+ '/m3u8.txt','r') as getTsUrlList:

tsList = getTsUrlList.readlines()[index:]

tsListlen = len(tsList)

for i in tsList[1:]:

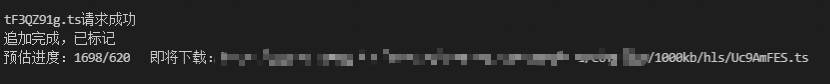

print("预估进度:" + str(index1-3) + '/' + str(tsListlen-1) + " 即将下载:" + i)

res = ''

for item in range(1,10):

try:

response = requests.get(i, headers=headers,timeout=3)

except Exception as e:

print(i[-12:-1] + '请求超时,重新请求第' + str(item) + '次')

continue

if(response.status_code == 200):

print(i[-12:-1] + '请求成功')

res = response

if keycontent == '000000000000':

print('未加密,直接追加')

cont=res.content

else:

try:

cont=cryptor.decrypt(res.content)

except:

pass

with open(os.getcwd() + '/' + now +'.mp4', 'ab+') as f:

f.write(cont)

f.close()

index1 += 1

print("追加完成,已标记")

with open(os.getcwd()+ '/index.txt','w') as f:

f.write(str(index1))

f.close()

break

else:

continue

with open(os.getcwd()+ '/jishu.txt','w') as indexFile:

indexFile.write(str(Index+1) + ' \n')

with open(os.getcwd()+ '/m3u8.txt','a') as indexFile:

indexFile.truncate(0)

with open(os.getcwd()+ '/index.txt','w') as f:

f.write('0')

f.close()

return True

def getM3u8(htmlUrl):
    # Returns 1 when a playlist URL was stored (tsList must expand it),
    # 2 when the ts links were stored directly, 3 when no m3u8 link was found.
    global m3u8Url_before
    content = requests.get(htmlUrl, headers=headers).text
    bsObj = BeautifulSoup(content, "html.parser")
    scripts = bsObj.findAll("script")
    index = 0
    for scriptItem in scripts:
        index += 1
        if '.m3u8' in str(scriptItem):
            pattern = re.compile(r'http[s]?://(?:[a-zA-Z]|[0-9]|[$-_@.&+]|[!*\(\),]|(?:%[0-9a-fA-F][0-9a-fA-F]))+')
            m3u8Url = pattern.search(str(scriptItem)).group()
            m3u8Url_before = getDomain(m3u8Url)
            if 'hls' not in m3u8Url:
                content = requests.get(m3u8Url, headers=headers).text
                # Positions just past each newline; the slice below takes the
                # last newline-terminated line of the response
                nList = [pos + 1 for pos, ch in enumerate(content) if ch == '\n']
                m3u8Url_hls = content[nList[-2]:nList[-1]]
                if 'http' not in m3u8Url_hls:
                    m3u8Url_hls = m3u8Url_before + m3u8Url_hls
            else:
                m3u8Url_hls = m3u8Url
            if 'ENDLIST' not in m3u8Url_hls:
                with open(os.getcwd() + '/m3u8.txt', 'w') as f:
                    f.write(m3u8Url_hls)
                return 1
            else:
                # The response was already a media playlist: store a dummy URL
                # line, the "unencrypted" placeholder, then every segment path
                content = requests.get(m3u8Url, headers=headers).text.split(",")
                with open(os.getcwd() + '/m3u8.txt', 'a+') as f:
                    f.write('hello~~' + '\n')
                    f.write('000000000000\n')
                    for i in content:
                        if '/' in i:
                            lineEnd = i[1:].index('\n') + 1
                            f.write(i[1:lineEnd] + '\n')
                return 2
        else:
            if index == len(scripts):
                print("No m3u8 link found in the page's script tags...")
                return 3

def jiexiHtml(htmlUrl):

content = requests.get(htmlUrl,headers=headers).text

bsObj = BeautifulSoup(content,"html.parser")

global videoName

videoName = bsObj.find('h1',{'class','page-title'}).text

videoItemHtml = bsObj.find('div',{'class':'scroll-content'}).findAll('a',href=re.compile("^(/ShowInfo/)((?!:).)*$"))

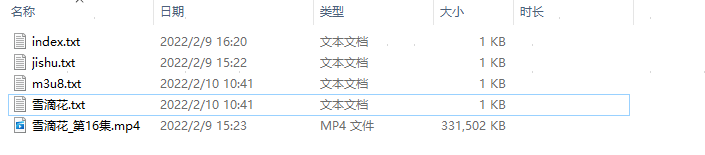

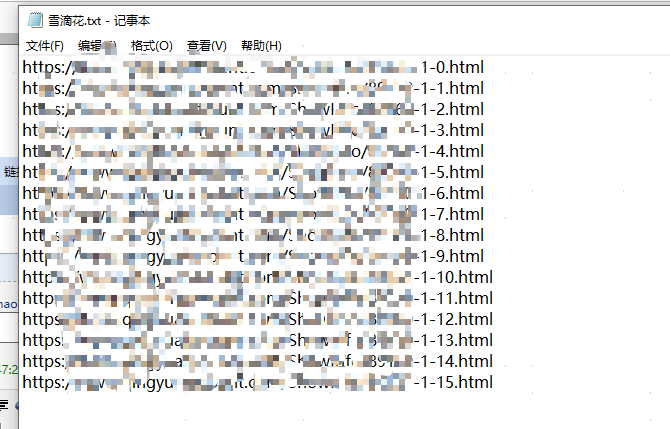

with open(os.getcwd()+ '/' +videoName+'.txt','w') as indexFile:

indexFile.truncate(0)

for i in videoItemHtml:

item = str(i.attrs['href'])

if 'http' not in item:

Domain = getDomain(htmlUrl)

with open(os.getcwd()+ '/' +videoName+'.txt','a') as indexFile:

indexFile.write(Domain + item + '\n')

else:

with open(os.getcwd()+ '/' +videoName+'.txt','a') as indexFile:

indexFile.write(item + '\n')

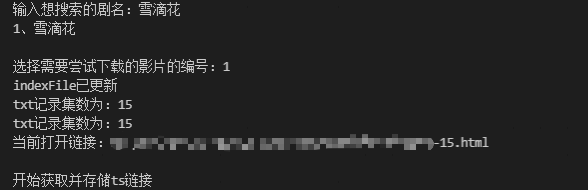

print("indexFile已更新")

try:

with open(os.getcwd()+ '/jishu.txt','r') as indexFile:

pass

except FileNotFoundError as e:

print("jishu不存在,已创建并置零")

with open(os.getcwd()+ '/jishu.txt','a') as indexFile:

indexFile.write('0 \n')

with open(os.getcwd()+ '/' +videoName+'.txt','r') as indexFile:

HtmlArr = indexFile.readlines()

with open(os.getcwd()+ '/jishu.txt','r') as indexFile:

jishu = int(indexFile.read())

print("txt记录集数为:" + str(jishu))

for htmlItem in HtmlArr[jishu:]:

with open(os.getcwd()+ '/jishu.txt','r') as indexFile:

jishuRun = int(indexFile.read())

print("txt记录集数为:" + str(jishuRun))

openHtml(htmlItem,jishuRun)

def openHtml(htmlItem, jishu):
    # Lines read from the episode list end with a newline; strip it before use
    htmlItem = htmlItem.strip()
    print("Opening link: " + htmlItem)
    pd = getM3u8(htmlItem)
    if pd == 1:
        tsList(jishu)
    elif pd == 2:
        if Download(jishu):
            return True

def getDomain(htmlUrl):
    # Scheme and host of a URL, e.g. everything before the first path slash
    pattern = re.compile(r'http[s]?://[a-zA-Z\-.0-9]+(?=\/)')
    return str(pattern.search(htmlUrl).group())

def searchVideo(searchName):
    # Replace the placeholder string with the address of the site you found
    content = requests.get('ADDRESS_OF_THE_SITE_YOU_FOUND', headers=headers, params={'searchword': searchName})
    bsObj = BeautifulSoup(content.text, "html.parser")
    VideoList = bsObj.findAll("a", {"class": "module-item-pic"})
    if VideoList:
        return VideoList
    else:
        return False

if __name__ == '__main__':

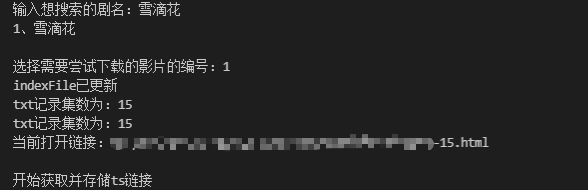

searchName = input('输入想搜索的剧名:')

global headers

headers = {

}

htmlUrlList = searchVideo(searchName)

if htmlUrlList:

li = 0

for i in htmlUrlList:

li += 1

title = i.find('img',{"class":"lazyload"}).attrs['alt']

print(str(li) + '、' + title +'\n')

else:

print("未搜索到相关影片")

try:

raise RuntimeError('testError')

except RuntimeError as e:

print("程序即将中断")

os._exit(0)

liNo = input('选择需要尝试下载的影片的编号:')

if htmlUrlList[int(liNo)-1]:

htmlUrl = htmlUrlList[int(liNo)-1].attrs['href']

if os.path.exists(os.getcwd()+ '/' + searchName):

pass

else:

os.makedirs(os.getcwd()+ '/' + searchName)

os.chdir( os.getcwd()+ '/' + searchName )

jiexiHtml('你找的影院地址' + htmlUrl)

else:

print("数值超出范围")
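
The script above locates segment links by splitting the playlist text on commas and prepending a host string by hand. As a possible alternative, the sketch below reads a media playlist line by line and resolves relative URIs with the standard library's `urljoin`. The names `parse_media_playlist` and `KEY_URI`, the sample playlist, and the `example.com` hosts are illustrative assumptions and not part of the original script.

```python
import re
from urllib.parse import urljoin

# Matches the URI attribute of an #EXT-X-KEY tag (hypothetical helper pattern)
KEY_URI = re.compile(r'#EXT-X-KEY:.*?URI="([^"]+)"')


def parse_media_playlist(playlist_url, text):
    """Return (key_url_or_None, list_of_absolute_segment_urls)."""
    key_url = None
    segments = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        match = KEY_URI.match(line)
        if match:
            # Key URIs may be relative to the playlist location
            key_url = urljoin(playlist_url, match.group(1))
        elif not line.startswith('#'):
            # Any non-tag line is a segment URI, relative or absolute
            segments.append(urljoin(playlist_url, line))
    return key_url, segments


# Made-up playlist mixing the three URI forms a playlist may contain
sample = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-KEY:METHOD=AES-128,URI="/keys/enc.key"
#EXTINF:10.0,
seg0.ts
#EXTINF:10.0,
/abs/seg1.ts
#EXTINF:4.0,
https://cdn.example.com/seg2.ts
#EXT-X-ENDLIST
"""

key_url, segments = parse_media_playlist(
    "https://video.example.com/hls/index.m3u8", sample
)
print(key_url)
for segment in segments:
    print(segment)
```

Because `urljoin` handles path-relative, host-relative, and absolute URIs uniformly, a parser along these lines would not need the separate `'http' not in item` branches, although the file layout expected by `Download` would have to be adapted to match.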